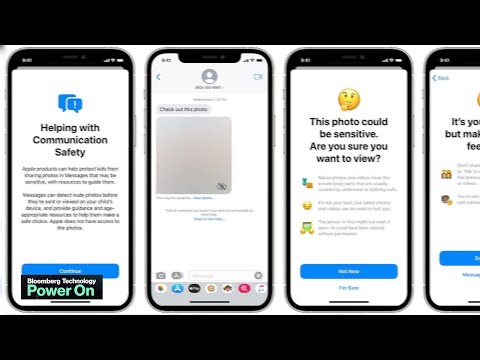

Apple is moving ahead with a parental control feature in the Messages app for iOS 15.2 that detects nudity. However, parents will have to enable the feature themselves.

Apple iOS 15.2 can detect nude photos

When Apple first unveiled its child protection features, they were met with a fairly critical response, resulting in a delay to the planned rollout. The feature that raised the biggest privacy concern – Apple scanning iCloud photos for child sexual abuse material (CSAM) – is still on hold, but according to Bloomberg, the update to Messages is scheduled for release with iOS 15.2. However, Apple says the feature won’t be turned on by default and that the image analysis will happen on-device, so the company won’t have access to potentially sensitive material.

According to Apple, once enabled, the feature will use on-device machine learning to detect whether photos sent or received in Messages contain explicit material. Potentially explicit incoming images will be blurred and the child will be warned before viewing them. The child will also be warned if they are about to send something that may be explicit.

In both cases, the child also has the option to contact a parent and tell them what's going on. In an FAQ, Apple states that for child accounts aged 12 and under, the child is warned that a parent will be notified if they view or send explicit material. For child accounts between the ages of 13 and 17, the child is warned of the potential risk, but parents are not notified.